All images by Zachary Tang for RICE Media unless otherwise stated.

For some time now, it has been possible for artificial intelligence (AI) to sound human. But what happens when it sounds too human?

With the right software, anyone can use AI to reproduce the complexities of a human voice. That concept is not new – most of us have used enough GPS apps, Google Translate, or Siri to know that it’s a thing.

What is relatively novel is that you can also generate AI recordings that sound like a specific person you know. This is called an ‘audio deepfake’.

It’s not perfect, but it’s powerful. It can read any user-generated text using a chosen voice (text-to-speech). It can also mimic a person’s accent, tone, and affect with impressive precision.

In short, it can give anyone, and everyone, the ability to slip into new identities.

Here’s an example. I created a handful of audio clips using open-source tools available on the internet. It was shockingly easy. Can you guess who these bots are impersonating?

Not too bad, right?

Some of these synthetic audio generators have become so effective that they don’t seem so entertaining anymore.

It’s a technique anyone could easily use to deceive others, and it’s not unthinkable that it could even be used to commit serious fraud.

And it’s getting harder to weed out such fakes. This is where someone like Dr Tan Lee Ngee comes in.

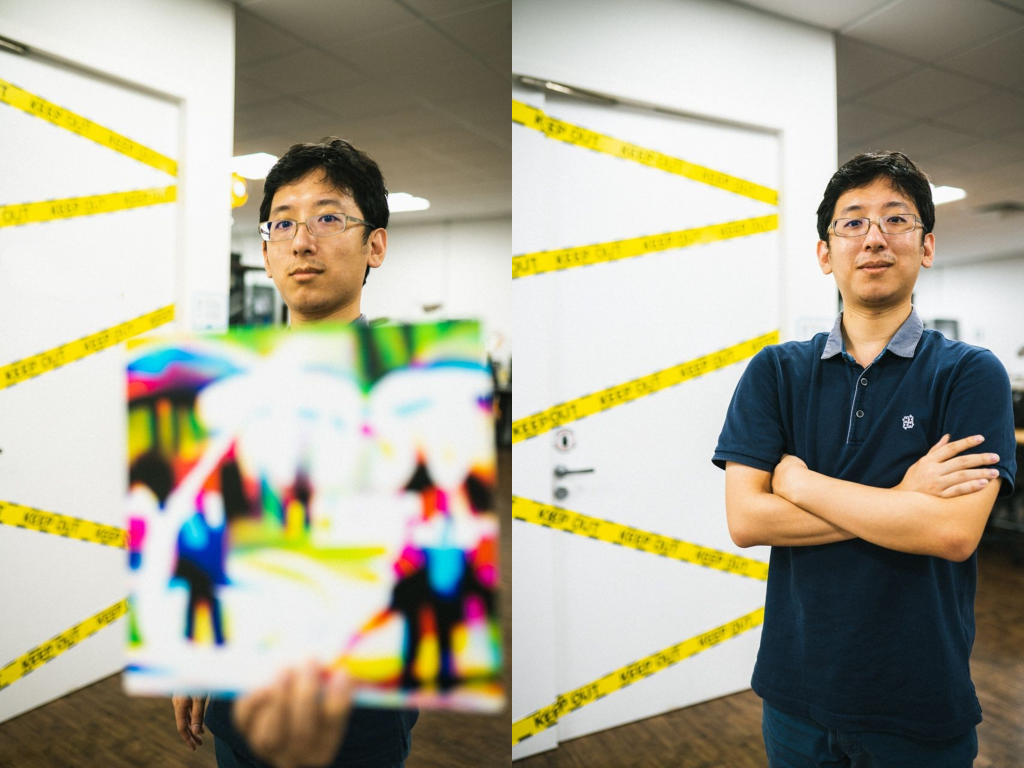

Lee Ngee is a Principal Researcher in the Sensors Division of DSO National Laboratories, Singapore’s largest national defence research and development organisation.

There, more than 1,600 scientists and engineers research and develop cutting-edge technologies to safeguard Singapore’s security and sovereignty. They also keep a close watch on potential threats to Singapore’s defence systems.

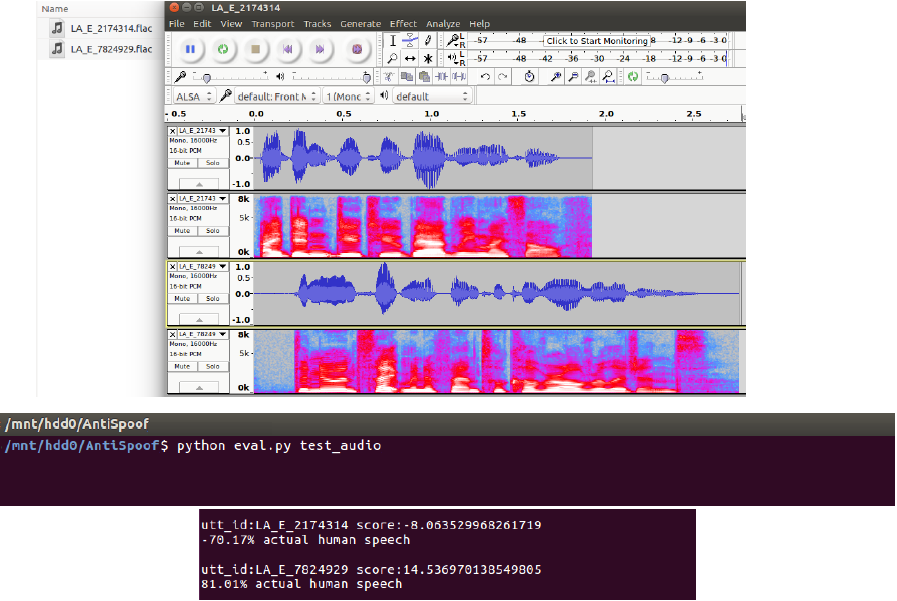

Lee Ngee spearheads research on audio deepfake detection, developing AI-driven tools that help determine whether audio information is authentic. Fighting AI with AI, in a way.

Lee Ngee is part of an invisible war to stop the spread of misinformation and other malicious acts.

I wanted to find out two things from her: How is it done? And, more importantly, what are the implications of her work that we should know about?

Swapping Real for the Surreal

Lee Ngee speaks with the patience and thoughtfulness that most people look for in a good mentor. She doesn’t hesitate to explain her highly technical work to me, breaking it down into the simplest terms possible.

“Sometimes, your familiarity with the speaker helps in knowing whether a piece of audio information is real or fake,” Lee Ngee explains. “Of course, it wouldn’t always be so easy to tell!”

Audio deepfakes do not always involve the manipulation of existing content; it’s possible to create audio deepfake assets from scratch.

Deepfake technology can also tweak audio and visual elements at the same time, making the trickery even harder to see through.

Take, for example, the viral TikTok video of Tom Cruise supposedly tripping over a carpet, or the altered footage of Ukrainian president Volodymyr Zelenskyy that was widely shared on Twitter amid the Russia-Ukraine war.

Such synthetic media are not merely pranks. They can have serious consequences.

In the algorithmic world of social media, bad-faith creators can use synthetic media to farm reactions. The simple truth is that many are not immune to these provocative tactics. That includes us.

A 2020 study by the Institute of Policy Studies found a gap in the average Singaporean’s ability to detect falsehoods.

Respondents who were older and who placed more trust in local online-only media showed strong confirmation bias in how they sought out and processed news.

They were less capable of critical analysis, such as telling true information from false, and less knowledgeable about how technology can shape the information they receive.

“It’s very hard to reconcile certain people’s views,” remarks Lee Ngee. “Our upbringing, experiences and values about life can propel us to believe, or not believe, in certain things.

“You could explain to someone that what they have learnt is not ‘real’ and support it with strong reasons. But they just wouldn’t believe you.”

The Human Behind the Machine

The development of deepfake technology is both rapid and unprecedented.

“Five years ago, nobody would have believed that audio deepfakes would be a threat,” Lee Ngee says. “The quality just wasn’t there.”

Back then, one could tell that speech was machine-generated simply by listening closely: its intonation wouldn’t sound natural.

There would also be abrupt pauses and sudden changes in reading speed. And when the output contained glaring flaws, such as warping or technical noise, the fakery was obvious.

In recent years, a boom in AI research has led to the rapid progress of deepfake technology.

Researchers and developers have openly shared their code and scripts online so that others can verify and replicate their findings. But this accessibility has also made audio deepfakes even more rampant.

Lee Ngee believes she didn’t choose to work with audio deepfake detection; it chose her.

“I’ve loved mathematics and science since I was a child,” she recalls. “I wanted to be a researcher. So, I joined DSO and pursued a PhD in electrical engineering under the DSO post-graduate scholarship.”

“In electrical engineering, there’s something called signal processing. This involves the analysis and manipulation of signals like visual or audio data to extract information,” Lee Ngee notes.

“Audio deepfake detection, in a way, also involves the processing of speech signals. That was how my work pivoted gradually.”

So, audio deepfakes can fool people. But can AI itself be fooled? Yes, but not in the way you might think.

Fooling AI

“You can call it ‘optical illusions’ for AI,” says Lee Ngee’s colleague, Jansen, smiling.

Jansen Jarret Sta Maria is an AI scientist in DSO’s Information Division. In a separate interview, he explains his line of work to me: making AI robust against adversarial noise.

“Adversarial noise is intentionally designed to prevent AI from functioning properly,” he explains.

What does that mean? Here’s how it works: as a self-driving car moves, its sensors detect people and objects on the road. This data is fed to the car’s AI, which uses it to avoid collisions.

However, adversarial noise can disrupt this. If such noise is planted on a stop sign by, say, a domestic terrorist, it could prevent the car from recognising the sign. The car would keep driving, regardless of what’s ahead on the road.

“Technically, all AI is vulnerable. It can be exploited systematically and fooled into not performing as intended,” Jansen explains. “We make sure AI is resistant to such attacks.”

Jansen is young and, at times, bashful. But none of it hides his enthusiasm. Like his colleague, Jansen studied on a scholarship, in his case as a DSTA Scholar. He took a liking to mathematics and AI, even though his current job is unlike anything he studied in school.

“The work at DSO is fresh and challenging,” Jansen says. “Attackers will always find ways to ‘disable’ an AI. This means we need to constantly update our AI models.”

Jansen believes his mindset changed in his first year of full-time work at DSO. “I used to think AI was extremely powerful and could do anything.

“When I started working on it, I realised it takes a lot of effort to make it successful. AI is not just operated at the click of a button.”

Invisible Warfare

Because AI is deployed to perform a multitude of tasks, there are many different ways it could be seriously tampered with. This means no single strategy can defend all AI. Specialists like Jansen can’t afford to be complacent.

“The key is to keep up,” Jansen asserts. “Attackers aren’t kind. They won’t share their strategies online for us to see. We have to continue investigating as much as we can. We also have to make sure our AI models can withstand any virtual storm.”

Lee Ngee feels the same. According to her, we are fighting an “invisible war”, where attacker and defender are locked in an ongoing arms race with no end in sight.

It sounds exhausting, but Lee Ngee doesn’t see her work as being done in isolation. She points to recent policies enacted by major social media companies like Meta and Twitter, both of which have developed their own approaches to detecting and removing synthetic media.

Lee Ngee and Jansen’s jobs require them to stay on top of AI advancements. But what about the ordinary boomer or doomscroller?

Perhaps we can rely on something that takes a lot of work but is just as rewarding: critical analysis skills. Questioning where a piece of news came from (was it from a verifiable source? Or a forwarded text on WhatsApp? What are their motives?) can go a long way.

Speaking with Lee Ngee and Jansen is both eye-opening and intimidating.

The technology they work with can be a measure of how prepared we are for an unpredictable future. But I’ll be frank: I’m still daunted by the fact that we can never truly be ready in this ever-changing virtual landscape.

“There is nothing much the public can do, apart from staying vigilant and being able to identify trusted sources and risks,” Jansen says, before adopting an air of assurance: “That’s why we’re here to help.”