Top image: Stephanie Lee / RICE File Photo

Where does the use of Artificial Intelligence (AI) cross the line from convenience to cheating?

Three Nanyang Technological University (NTU) students who took the Health, Disease Outbreaks, and Politics module under Assistant Professor Sabrina Luk thought they understood the basic proposition: don’t use AI to write your assignments. And they maintain they didn’t.

Yet they’ve found themselves accused of academic fraud by their institution for using AI tools to help sort the references for one particular course assignment.

The students have been upfront about the broken links and typos in their bibliographies caused by AI, but deny using “non-existent sources” in their assignment. Still, two of them may have to live with zeroes on that assignment and a permanent ‘academic fraud’ label on their transcripts.

The third student, Abby*, who wants to remain anonymous, tells RICE that it wasn’t until her grade appeal hearing on June 24, held without Asst Prof Luk present, that a panel agreed her actions didn’t constitute AI abuse. Fortunately for her, the ‘academic fraud’ label will be wiped from her records.

Even so, it took dogged determination and an appeal for her to clear her name.

The other two students, both awaiting graduation, haven’t been so fortunate. They remain in limbo, with no confirmation if their punishments will be overturned.

This isn’t simply a case of institutional miscommunication or opaque decision-making. It’s a symptom of how fragile our collective understanding of responsible AI use really is.

Teachers are understandably guarded, given the growing, often untraceable AI plagiarism in student work. But isn’t interpreting something like this as academic fraud going too far?

At a time when everyone is hopping on board the AI train, are schools well-equipped to define and punish AI misuse? Or are they breeding institutional mistrust and punishing transparency?

What Are the Rules?

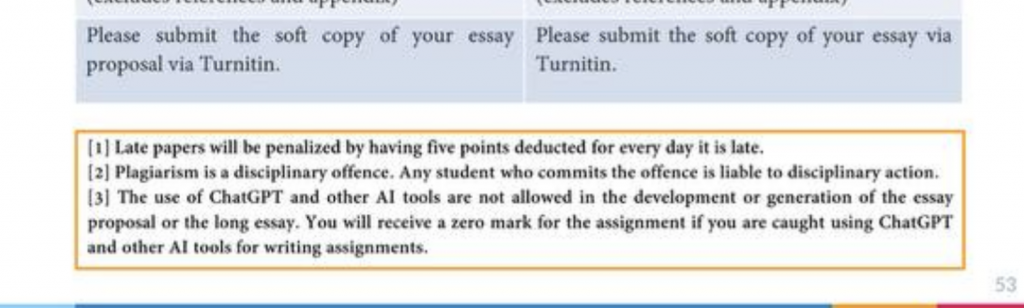

Let’s get to the root of the whole saga: the rule Asst Prof Luk set out for her class, which stated that AI could not be used for “the development or generation” of students’ essays.

In NTU’s public statement on the matter, the school maintained that AI was prohibited in the assignment to “assess students’ research skills, and their originality and independent thinking”. The school also told The Straits Times that it had determined the students used generative AI tools for the assignment.

At face value, the students would appear not to be blameless: they did use AI tools in an assignment where AI was prohibited. But if they did the research and wrote the essays themselves, using ChatGPT to sort their citations would hardly seem to contravene academic integrity. Right?

Citation sorters and generators are commonly used in academia. NTU itself refers students to platforms such as EndNote to organise their sources.

But even EndNote has incorporated generative AI into its 2025 version. Yes, it’s baffling that AI is being built into what’s essentially a glorified tab sorter, but should students who use these functions also be subject to punishment?

NTU didn’t respond to RICE’s request for more clarity on their rules surrounding the use of AI-powered citation managers. Instead, they referred RICE to their public statement, which states: “Students are ultimately responsible for the content generated. They must ensure factual accuracy and cite all sources properly.”

These cases make it evident that ChatGPT isn’t a reliable way to sort sources. AI’s jankiness is just as well known as its massive potential.

However, does using it for citation sorting really overstep the bounds that were set out by the professor?

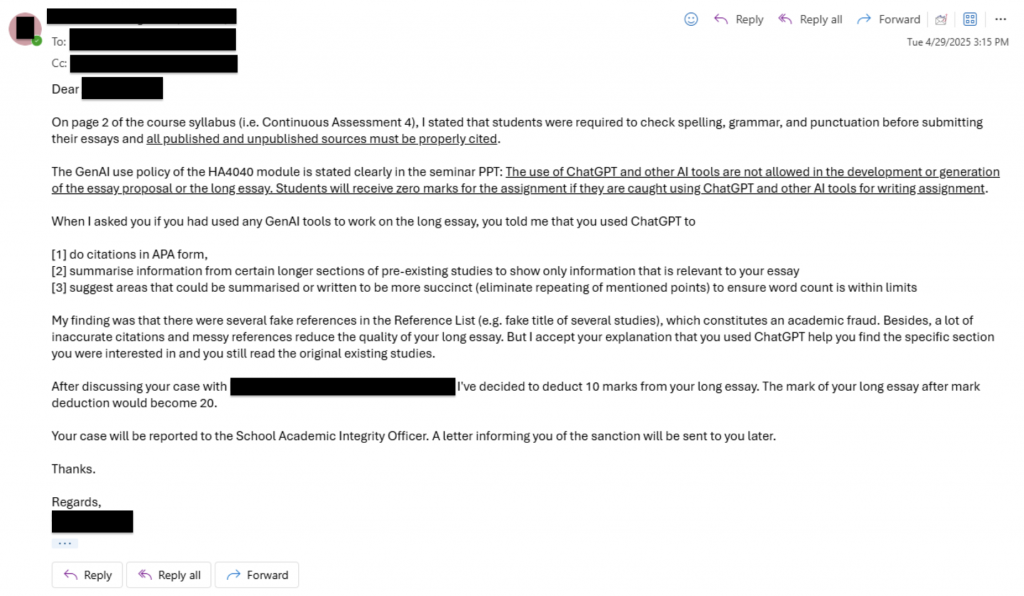

Adam*, a Year 4 student who also wants to stay anonymous, is still unsure where the line lies. In his case, Asst Prof Luk had initially deducted only ten marks from his essay for mistakes in his bibliography. Later, however, NTU overruled this decision and gave him a zero, he says.

Adam says that from the get-go, he was honest with Asst Prof Luk and the school about using ChatGPT to summarise and locate information in papers. He maintains, however, that he read the original sources afterwards, and Asst Prof Luk accepted his explanation.

Adam also used ChatGPT to organise his references. Despite his attempts to correct ChatGPT’s mistakes in his bibliography, he missed a few mislabelled references, which the school then identified as “fake”.

“Maybe we have different standards towards what constitutes ‘fake’—I really am not sure about the whole situation,” he says.

Cassie*, the other student still awaiting a decision, tells RICE that her June 25 discussion with faculty members shifted away from her use of AI to sort citations and towards her chats with ChatGPT for background research: information she says never made it into her final submission.

The Year 4 student notes that the professors at the meeting agreed she didn’t use AI to write any part of her paper. However, one reportedly took issue with any use of it for basic research, even if the content wasn’t included in the final essay, and her sanction was left unchanged.

If that’s the case, is academic integrity undermined by simply engaging with ChatGPT?

Collateral Damage

The irony is impossible to ignore. The students being punished are the ones who were transparent. They disclosed their use of AI in good faith, believing they had nothing to hide.

For the many students watching the affair unfold, the prospect of such a response to a seemingly menial, routine use of AI inspires not honesty, but quieter violations.

If the three students hadn’t been upfront about using AI in their citation process, would NTU’s staff have been able to distinguish it from genuine typos?

While NTU did not respond to RICE’s questions on how staff make these distinctions, Cassie believes it’s unlikely.

“It’s because I came clean. It’s because I told [Asst Prof Luk] up front that I used ChatGPT to format the bibliography. I never, ever thought that this would be something that is not allowed because it’s a very basic function. Now I’m wondering: Was honesty really the best policy?”

This isn’t to say that students should be exempt from due process, feign ignorance to dodge accountability, or flagrantly use AI in their work. Schools are wary of AI-assisted cheating precisely because some students have abused the technology. Some are even proud of it.

However, blanket prohibitions and vague rules that leave students guessing don’t do much to prevent cheating. They might just make the bad apples even harder to find.

The Missing Middle Ground

In the academic sphere—and beyond, as AI continues to worm its way into everything from customer service chatbots to smart watches—we don’t just need clearer demarcations on what counts as misuse. We need to find nuance.

We’re still struggling to separate misuse from misjudgment, and the rules we have are rigid without being clear. It’s jarring to see, especially as institutions scramble to make their students as AI-savvy as possible.

These days, students have started pre-emptively keeping records of their Google Docs history and chat logs to prove their innocence. But even that doesn’t seem to suffice. For Adam and Cassie, at least, being able to prove they wrote their essays from scratch hasn’t been enough to overturn their permanent academic fraud records.

And while Abby’s potential acquittal leaves room for optimism, it still raises the question of why it took such extensive conversations with the school to reach this conclusion.

“My objective of participating in these interviews is mainly to support and lend credibility… an additional account to the dissatisfactory processes of this case,” says Adam, who continues to wait on an appeal he sent to the school in May.

This isn’t about letting students off the hook. There should be boundaries. But boundaries only work when they’re clear, visible, and consistently enforceable.

If academic institutions are serious about navigating the AI era, they can’t afford to govern it through ambiguity and anxiety.

As students like Adam and Cassie face permanent marks on their academic records, others brag about successfully cheating the system. Is academic integrity truly being upheld?

*Names have been changed to protect identities